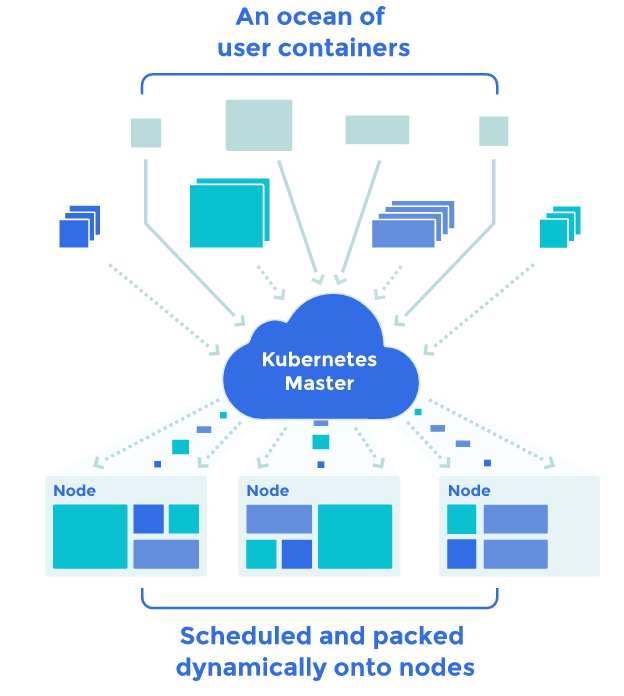

Kubernetes is an open-source system for automating the deployment, scaling and management of containerized applications. The term the project itself uses is production-grade container orchestration. It was originally developed by Google to help manage its applications.

Kubernetes packages groups of one or more containers, which run your applications, into units it calls Pods. A Pod is managed by Kubernetes, and its containers are deployed, started, stopped and replicated together as a group.
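To make the idea of a Pod concrete, here is a minimal sketch of a Pod configuration. The names `hello-web` and `web` and the `nginx:1.25` image are placeholders chosen for illustration, not anything the platform prescribes; substitute your own application image.

```yaml
# pod.yaml: a minimal single-container Pod (all names are illustrative)
apiVersion: v1
kind: Pod
metadata:
  name: hello-web            # hypothetical Pod name
  labels:
    app: hello-web           # label that other objects can select on
spec:
  containers:
    - name: web              # hypothetical container name
      image: nginx:1.25      # stand-in image; use your own application image
      ports:
        - containerPort: 80  # port the container listens on
```

A Pod can list more than one entry under `containers`; those containers are then scheduled onto the same Node and started and stopped together.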

Ultimately, these all run on a Cluster, which is a collection of Nodes. Each Node is a virtual machine or physical computer. A Master node (called the control plane in current Kubernetes documentation) manages your applications' desired state, the scheduling of applications, the rollout of updates, and scaling. When you want to deploy applications, you tell Kubernetes to update its desired state and provide it with containerized applications.

The Glue

The glue that connects Pods, containers and your cluster is the Service. Pods, which contain instances of your application, are ephemeral: they live and die as Kubernetes sees fit. Each Pod is assigned an IP address, but those addresses change too as Pods come and go on your cluster. A Kubernetes Service defines a set of Pods and the rules for how you can access them.
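As a rough sketch of what such a Service looks like, the configuration below assumes your Pods carry the label `app: hello-web` and listen on port 80. Both the label and the Service name are made up for this example.

```yaml
# service.yaml: a stable address in front of a changing set of Pods
apiVersion: v1
kind: Service
metadata:
  name: hello-web        # hypothetical Service name
spec:
  selector:
    app: hello-web       # the "set of Pods": every Pod carrying this label
  ports:
    - protocol: TCP
      port: 80           # port the Service exposes inside the cluster
      targetPort: 80     # port on the selected Pods that receives the traffic
```

Because the Service finds its Pods by label rather than by IP address, Pods can be replaced freely while clients keep using the same Service name.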

Additionally, there are many types of controllers that are responsible for Pod and Service management. The Controller you are likely to become most intimately familiar with is a Deployment Controller. You create Deployment objects which represent the desired state of your cluster and applications, and the Deployment Controller attempts to change the current state of your cluster into the desired state.
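A Deployment object might look like the sketch below. It assumes a hypothetical application labeled `app: hello-web` and uses `nginx:1.25` purely as a stand-in image. The desired state here is "three replicas of this Pod template".

```yaml
# deployment.yaml: desired state for a replicated application (names are illustrative)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-web              # hypothetical Deployment name
spec:
  replicas: 3                  # desired number of Pods
  selector:
    matchLabels:
      app: hello-web           # must match the labels in the Pod template below
  template:                    # the Pod that each replica is created from
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: web            # hypothetical container name
          image: nginx:1.25    # stand-in image; use your own application image
          ports:
            - containerPort: 80
```

If you saved this as `deployment.yaml`, `kubectl apply -f deployment.yaml` would submit it to the cluster and `kubectl get pods` would list the resulting Pods. Changing `replicas` and applying the file again is one way to scale: the controller adds or removes Pods until the current state matches.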

Kubernetes API and kubectl

You interact with the Kubernetes API through .yaml configurations that describe the desired state of your application. These describe various things: the context and configuration of your cluster, individual Pods and their configuration, how to deploy those Pods, how they can interact with each other and the network, and so on.

You can define simple and complex rules around scaling, packaging and deployment. This may sound like a daunting step since the Kubernetes API is so extensive; however, the project conveniently provides your main tool for working with Kubernetes: kubectl. kubectl is a CLI for interacting with the Kubernetes API, and it can also generate starter configurations for the resources you will commonly need. With a few simple commands, you can create and run your first deployment and dig into the details at your own pace.

Doesn’t <XYZ> do this?

Whether you’re talking about Amazon AWS, Microsoft Azure, DigitalOcean or another IaaS platform, the answer is simple: yes. All of those platforms provide similar functionality, which you can use without learning a new platform like Kubernetes.

The difference is that Kubernetes is an abstraction that can be used in conjunction with all of those cloud providers. What Kubernetes provides, then, is extensibility, portability and a common interface for handling scaling and deployment.

The exciting part is that Kubernetes is open-source software and a one-stop shop for doing devops. You can extend it as you see fit and put it on whatever you’d like: from any cloud provider to your own bare metal. If AWS decides to double their price for using Elastic Beanstalk: that’s fine! If you get a better deal from a competing cloud service: that’s fine! If you decide you have a need for your own physical hardware: that’s fine! Your Kubernetes configuration carries over largely unchanged, so you can move your stack without having to worry, “Will it scale? Will it work?”

To continue learning about Kubernetes, check out the other posts in this series:

Deploying a Full-Stack Dart Application on Google Cloud Using Docker and Kubernetes

SSL Load Termination and Load Balancing in Kubernetes Clusters

Erik Rahtjen
