In a recent iOS Dev Weekly newsletter, Dave Verwer voiced a familiar sentiment: “Unfortunately I’ve not yet managed to get very excited about AR myself, mainly due to the fact that today we must view the AR world through a tiny screen held in our hands […] but I have been keeping an eye out for projects that could change my mind about it.” I remember feeling similarly not that long ago.

We see a lot of novel and fun augmented reality projects, but few that are put to work on practical business cases. I would like to share a business case from a massive enterprise that is investing in software to automate internal workflows, using a computer vision mobile app with AR features, and that demonstrates the real business value of AR.

The company is a well-oiled, billion-dollar Fortune 500 enterprise that manufactures its own products. Like most companies of its size and scale, it has risk-management and change-control processes to ensure that changes to its operations happen incrementally and with minimal risk. A fully automated solution likely requires many new technologies and processes, and switching to all of them at once would be far too risky. Instead, these technologies are usually introduced one at a time while a human stays in charge, creating a hybrid, semi-automated approach. This approach helps validate the technologies involved and flesh out the processes and edge cases. During this phase, the technologies assist the user with tasks until they are ready to perform those tasks entirely on their own. It is in this hybrid phase that we are likely to find many great AR use cases.

Computer Vision in Production

During a normal workday, the company relies on thousands of barcodes in its logistics operations for asset tracking and proof of delivery. Over time, the enterprise realized that traditional barcode scanning was time-consuming and inefficient for its employees. It decided that the first step toward a more automated process would be moving from traditional laser barcode scanners to optical scanning in a mobile app that uses computer vision. The app uses the device camera and a combination of native and third-party computer vision technologies. It also offers a modest speed improvement that justifies the application in the short term.

So how does this app work? In many cases, an asset is identified by multiple barcodes on a single label (e.g. one for the product name, one for the serial number, etc.). Traditionally, when receiving product, employees would use a laser scanner to scan each barcode individually. With the new application, employees can scan all the barcodes on a label at once, which speeds up the process. However, the real strategic importance of switching to optical scanning lies in the doors it opens to advanced technologies such as computer vision, machine learning, augmented reality, and ultimately automation. We know a fully automated solution will likely use optical sensors to map out the real world. Switching the handheld scanners from laser to optical during this hybrid phase validates that current camera hardware and detection software are ready for prime time. It also lets the client gather training datasets for machine learning algorithms, which will be invaluable for the eventual transition to full automation. Every time a user scans a label, the app captures another example of what a “label” looks like, creating the potential for thousands of samples to train the machine learning models. And now that we have a mobile app built on computer vision, we can easily add augmented reality features.
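To make the multi-barcode idea concrete, here is a minimal Swift sketch of how one camera read might be turned into a single label record. It is not the client’s code: `LabelField`, `DetectedBarcode`, `LabelScan`, and `assembleLabel(from:)` are hypothetical names, the sketch assumes the detector (native or third-party) has already decoded each barcode and determined which field it encodes, and it models only the two fields mentioned above.

```swift
// Hypothetical model: one physical label carries several barcodes,
// each encoding a different field.
enum LabelField {
    case productName
    case serialNumber
}

// A single barcode the detector found and decoded in the camera frame.
struct DetectedBarcode {
    let field: LabelField
    let payload: String
}

// A complete label, assembled from all of its barcodes in one pass.
struct LabelScan {
    let productName: String
    let serialNumber: String
}

/// Builds a label record from every barcode decoded in one camera read.
/// Returns nil if any required field is missing, so a partial read is
/// never recorded as a successful scan.
func assembleLabel(from barcodes: [DetectedBarcode]) -> LabelScan? {
    var fields: [LabelField: String] = [:]
    for barcode in barcodes {
        fields[barcode.field] = barcode.payload
    }
    guard let name = fields[.productName],
          let serial = fields[.serialNumber] else {
        return nil
    }
    return LabelScan(productName: name, serialNumber: serial)
}
```

Rejecting partial reads is a design choice in this sketch: it keeps a half-decoded label from being counted as received, and complete records like these are the kind of labeled examples that could feed the training datasets described above.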

Setting up for Success with AR

It did not take long for the business to find the first problem well suited to an AR solution. The use case is modest and simple compared to the typical AR videos circulating on social media, but it provides real value to the customer: it enables workers to quickly find items they missed when receiving product. Imagine a busy warehouse with many bays where semi-trucks dock and forklifts move pallets full of product from the trucks into the warehouse. These pallets hold labeled containers that the receiver scans to verify receipt. Warehouse employees walk around the pallets scanning the label on each container. This is our user. Our user is human. And in typical human fashion, sometimes they make mistakes.

One common mistake is missing one of the labels while receiving the product. The user usually doesn’t find out until the end of the process, when they walk over to the receiving desk and realize the system expected more of product X than was scanned in. Sometimes the shipment is indeed short, but most of the time a label was simply missed during scanning. Now the user has to go find the label they overlooked. Often they have to start over, scanning the grid label by label until they find the one they missed. Many users anticipate this and carry a marker to physically mark each label as they scan it, which is tedious in its own right.
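The check at the receiving desk boils down to comparing the manifest against what was scanned. Here is a minimal Swift sketch, assuming the expected quantities arrive as a dictionary keyed by product and each scanned label contributes one product entry; `missingCounts(expected:scannedProducts:)` and both input shapes are hypothetical.

```swift
/// Compares the quantities expected on the shipment with the labels that
/// were actually scanned, and returns how many labels are unaccounted for
/// per product. Products with no shortfall are omitted.
func missingCounts(expected: [String: Int], scannedProducts: [String]) -> [String: Int] {
    // Tally scanned labels per product.
    var scanned: [String: Int] = [:]
    for product in scannedProducts {
        scanned[product, default: 0] += 1
    }
    // Record only positive shortfalls; extra scans never produce negatives.
    var missing: [String: Int] = [:]
    for (product, quantity) in expected {
        let shortfall = quantity - scanned[product, default: 0]
        if shortfall > 0 {
            missing[product] = shortfall
        }
    }
    return missing
}
```

Note what this comparison can and cannot tell the user: it reports that one label of product X is unaccounted for, but not which container on the pallet carries it. That missing location is exactly what sends people back to rescanning the grid.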

As you can imagine, this kind of process is inefficient, wastes employee time that could be spent elsewhere, and is still error-prone. A fully automated solution would avoid this mistake altogether, but while the company is still in the hybrid phase where humans are involved, augmented reality is a great solution. As discussed earlier, besides the modest speedup from scanning multiple barcodes simultaneously, one of the major benefits of building the mobile app was opening the door to augmented reality features. It became clear that an AR “marker” feature would be a great solution. The premise is simple: place a green virtual marker on every label scanned. If the app detects a label but fails to scan it, it places a red marker on that label instead.
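The marker rule itself is simple enough to sketch. The Swift below is a hypothetical model, not the app’s implementation: it leaves out the AR side entirely (anchoring and rendering the markers), and it assumes each physical label can be given a stable integer `labelID`, for example by quantizing where the label sits in the world. It also assumes that once a label is scanned successfully, a later failed read of the same label should not turn its marker red.

```swift
/// What the detector reported for one label in the camera frame.
enum DetectionResult {
    case decoded(payload: String)  // label found and its barcodes read
    case detectedButUnreadable     // label found, but decoding failed
}

enum MarkerColor {
    case green
    case red
}

/// The rule described above: green for scanned, red for detected-but-unscanned.
func markerColor(for result: DetectionResult) -> MarkerColor {
    switch result {
    case .decoded:
        return .green
    case .detectedButUnreadable:
        return .red
    }
}

/// Keeps one marker per physical label. `labelID` is a hypothetical
/// stable key for a label; how it is derived is outside this sketch.
struct MarkerStore {
    private(set) var markers: [Int: MarkerColor] = [:]

    mutating func record(labelID: Int, result: DetectionResult) {
        // Assumption: a successful scan is never downgraded by a later failed read.
        if markers[labelID] == MarkerColor.green {
            return
        }
        markers[labelID] = markerColor(for: result)
    }
}
```

A label that was never detected simply has no entry in `markers`, which is what shows up on the pallet as a hole with no marker at all.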

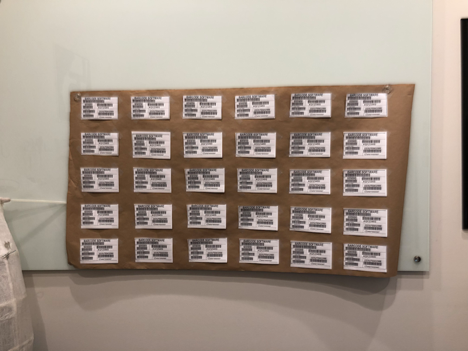

This is my “pallet simulator” where I have 30 test labels arranged in a grid. This is what it looks like before scanning:

An actual shipment may have something like 15 of these labels. It’s easy to see how finding the one missed label could be tedious; the AR feature, however, makes it a breeze. You simply walk around the pallet and look for either a hole where no label was detected or a red marker where a label was detected but failed to scan. This is what it looks like from the app:

The customer is still in the early stages of vetting technologies, and this was an interesting proof of concept. I look forward to seeing more innovative projects from them in the future. I hope I’ve shed some light on how we might find many AR use cases in the “hybrid” approach companies will take on the path toward automation. A lot of people have difficulty imagining AR providing real value on mobile, but I hope sharing this use case will excite people and inspire more business cases for AR. The more we share, and the more people are inspired to make their own projects a reality, the clearer the value of AR in business will become. I’d love to hear about the AR use cases that get you excited, so let me know on Twitter at @stablekernel.

Jon Day